Monte Carlo Methods

Monte Carlo methods require only experience - sample sequences of states, actions, and rewards from actual or simulated interaction with an environment but no complete knowledge of environment.

Only on the completion of an episode are value estimates and policies changed. Monte Carlo methods can thus be incremental in an episode-by-episode sense, but not in a step-by-step (online) sense.

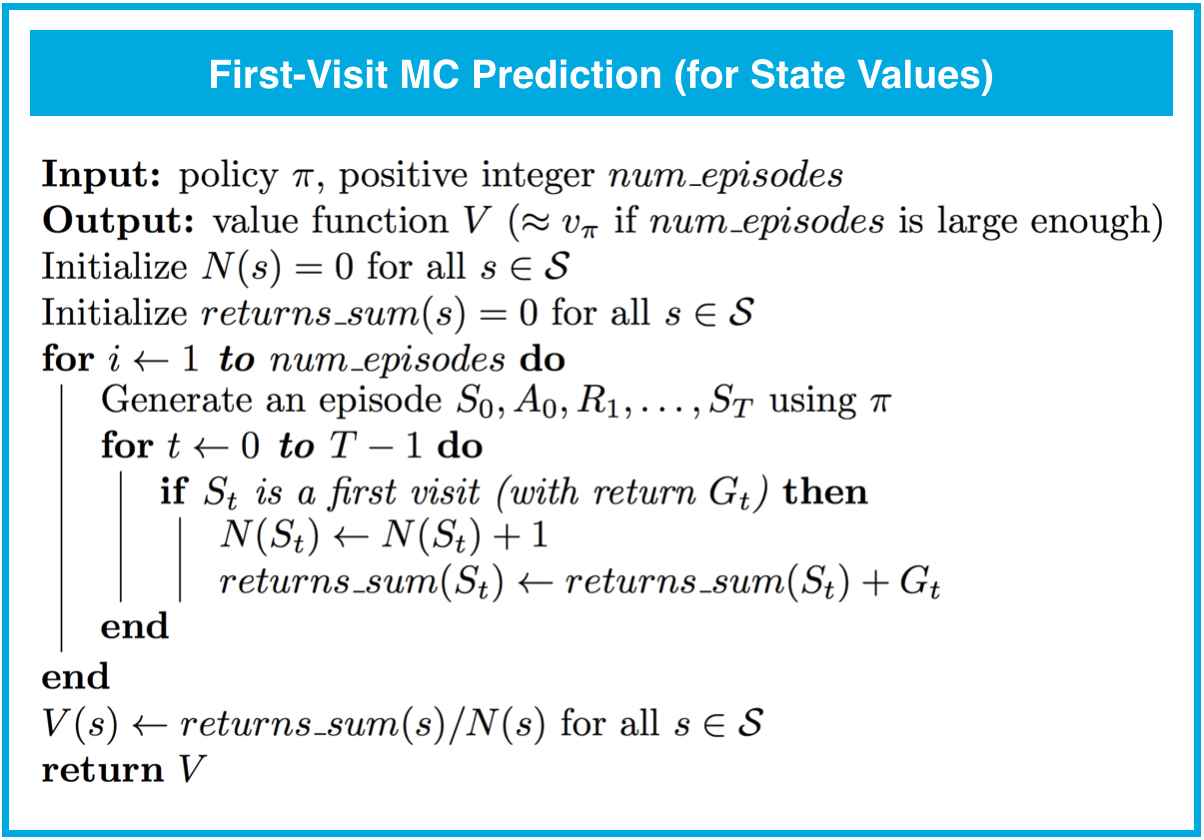

The first-visit MC method estimates as the average of the returns following first visits to , whereas the every-visit MC method averages the returns following all visits to .

- Every-visit MC is biased, whereas first-visit MC is unbiased.

- Initially, every-visit MC has lower mean squared error (MSE), but as more episodes are collected, first-visit MC attains better MSE.

Both the first-visit and every-visit method are guaranteed to converge to the true value function, as the number of visits to each state approaches infinity.

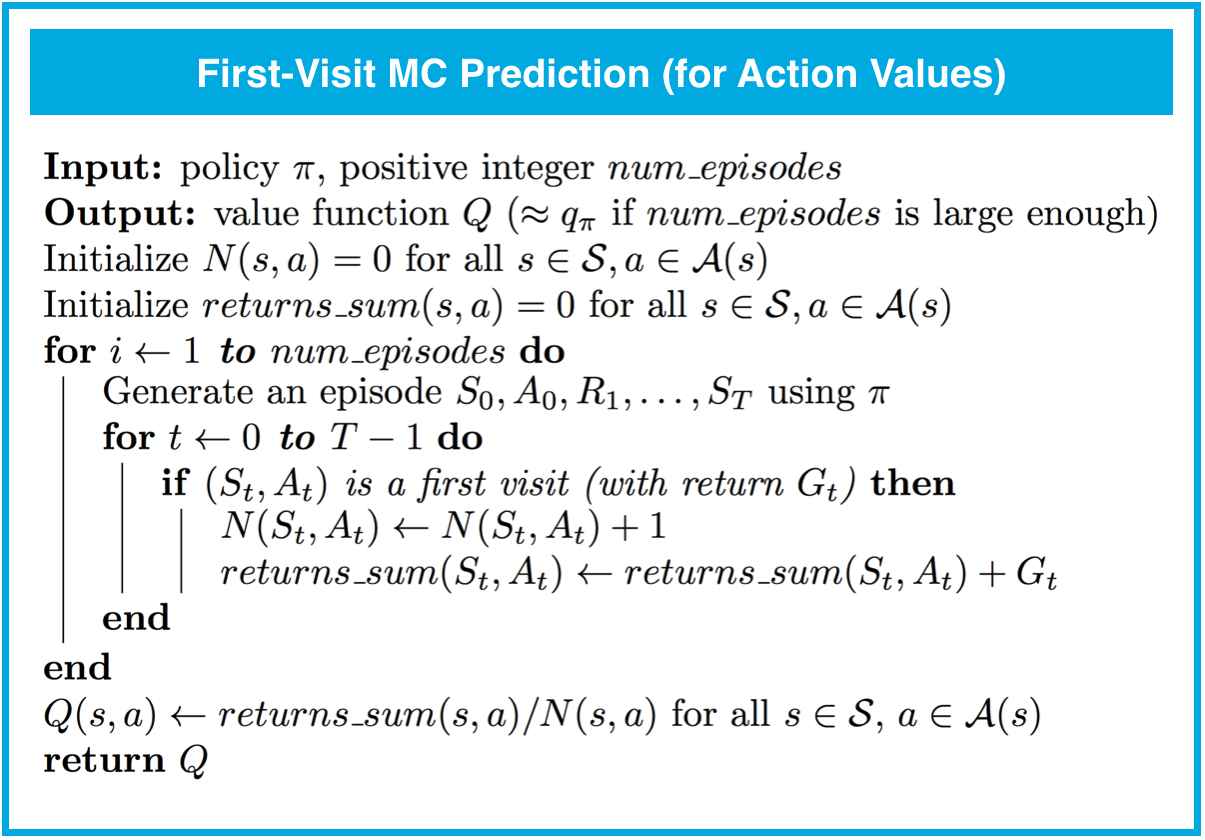

MC Prediction (Action Values)

If a model is not available, then it is particularly useful to estimate action values (the values of state–action pairs) rather than state values.

With a model, state values alone are sufficient to determine a policy; one simply looks ahead one step and chooses whichever action leads to the best combination of reward and next state.

We won't use MC prediction to estimate the action-values corresponding to a deterministic policy; this is because many state-action pairs will never be visited (since a deterministic policy always chooses the same action from each state). Instead, so that convergence is guaranteed, we will only estimate action-value functions corresponding to policies where each action has a nonzero probability of being selected from each state.

Exploration-Exploitation Dilemma

At every time step, when the agent selects an action, it bases its decision on past experience with the environment. And, towards minimizing the number of episodes needed to solve environments in OpenAI Gym, our first instinct could be to devise a strategy where the agent always selects the action that it believes (based on its past experience) will maximize return. With this in mind, the agent could follow the policy that is greedy with respect to the action-value function estimate. We examined this approach in a previous video and saw that it can easily lead to convergence to a sub-optimal policy.

To see why this is the case, note that in early episodes, the agent's knowledge is quite limited (and potentially flawed). So, it is highly likely that actions estimated to be non-greedy by the agent are in fact better than the estimated greedy action

With this in mind, a successful RL agent cannot act greedily at every time step (that is, it cannot always exploit its knowledge); instead, in order to discover the optimal policy, it has to continue to refine the estimated return for all state-action pairs (in other words, it has to continue to explore the range of possibilities by visiting every state-action pair). That said, the agent should always act somewhat greedily, towards its goal of maximizing return as quickly as possible. This motivated the idea of an -greedy policy.

We refer to the need to balance these two competing requirements as the Exploration-Exploitation Dilemma. One potential solution to this dilemma is implemented by gradually modifying the value of when constructing -greedy policies.

Setting the Value of , in Theory

It makes sense for the agent to begin its interaction with the environment by favoring exploration over exploitation. After all, when the agent knows relatively little about the environment's dynamics, it should distrust its limited knowledge and explore, or try out various strategies for maximizing return. With this in mind, the best starting policy is the equiprobable random policy, as it is equally likely to explore all possible actions from each state. You discovered in the previous quiz that setting yields an -greedy policy that is equivalent to the equiprobable random policy.

At later time steps, it makes sense to favor exploitation over exploration, where the policy gradually becomes more greedy with respect to the action-value function estimate. After all, the more the agent interacts with the environment, the more it can trust its estimated action-value function. You discovered in the previous quiz that setting yields the greedy policy (or, the policy that most favors exploitation over exploration).

Thankfully, this strategy (of initially favoring exploration over exploitation, and then gradually preferring exploitation over exploration) can be demonstrated to be optimal.

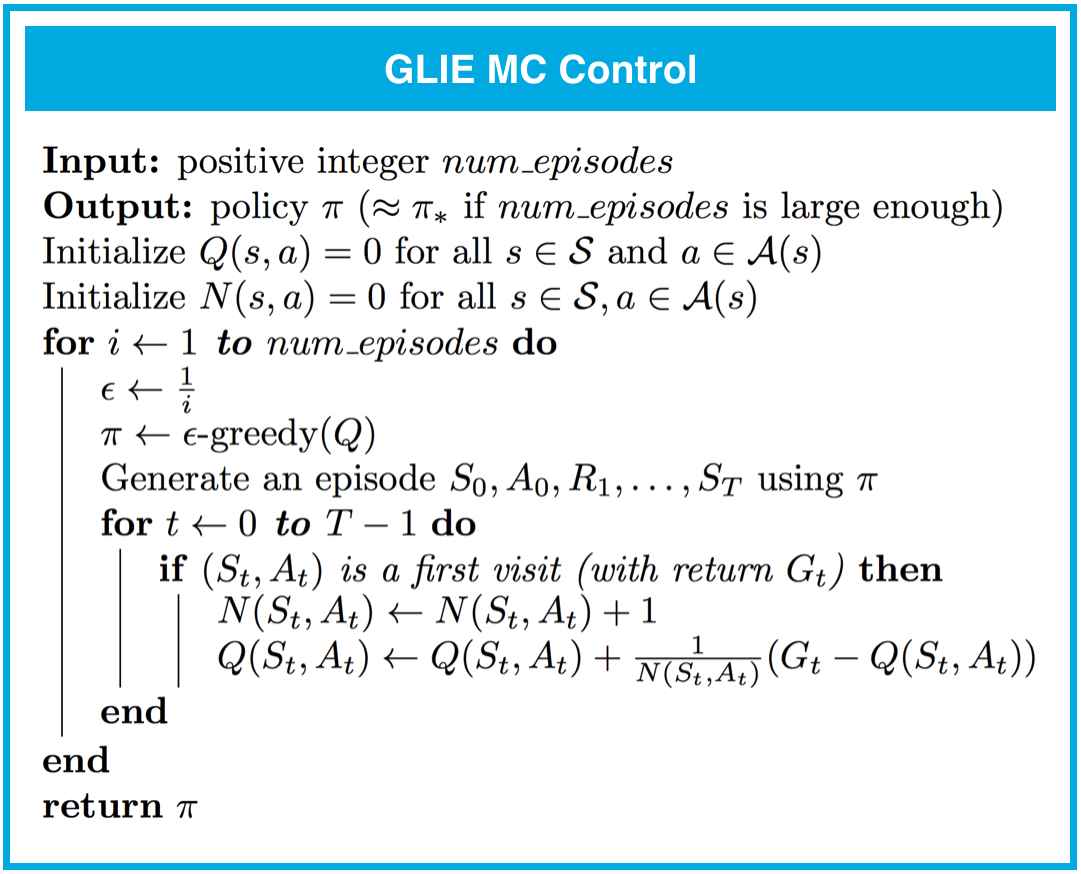

GLIE

In order to guarantee that MC control converges to the optimal policy , we need to ensure that two conditions are met. We refer to these conditions as Greedy in the Limit with Infinite Exploration (GLIE). In particular, if:

- every state-action pair , (for all ) is visited infinitely many times, and

- the policy converges to a policy that is greedy with respect to the action-value function estimate ,

then MC control is guaranteed to converge to the optimal policy (in the limit as the algorithm is run for infinitely many episodes). These conditions ensure that:

- the agent continues to explore for all time steps, and

- the agent gradually exploits more (and explores less).

One way to satisfy these conditions is to modify the value of when specifying an -greedy policy. In particular, let correspond to the -th time step. Then, both of these conditions are met if:

- for all time steps , and

- decays to zero in the limit as the time step approaches infinity (that is, )

For example, to ensure convergence to the optimal policy, we could set (You are encouraged to verify that for all , and

Setting the Value of , in Practice

As you read in the above section, in order to guarantee convergence, we must let decay in accordance with the GLIE conditions. But sometimes "guaranteed convergence" isn't good enough in practice, since this really doesn't tell you how long you have to wait! It is possible that you could need trillions of episodes to recover the optimal policy, for instance, and the "guaranteed convergence" would still be accurate!

Even though convergence is not guaranteed by the mathematics, you can often get better results by either: - using fixed , or - letting decay to a small positive number, like 0.1.

This is because one has to be very careful with setting the decay rate for ; letting it get too small too fast can be disastrous. If you get late in training and is really small, you pretty much want the agent to have already converged to the optimal policy, as it will take way too long otherwise for it to test out new actions!

MC Control: GLIE

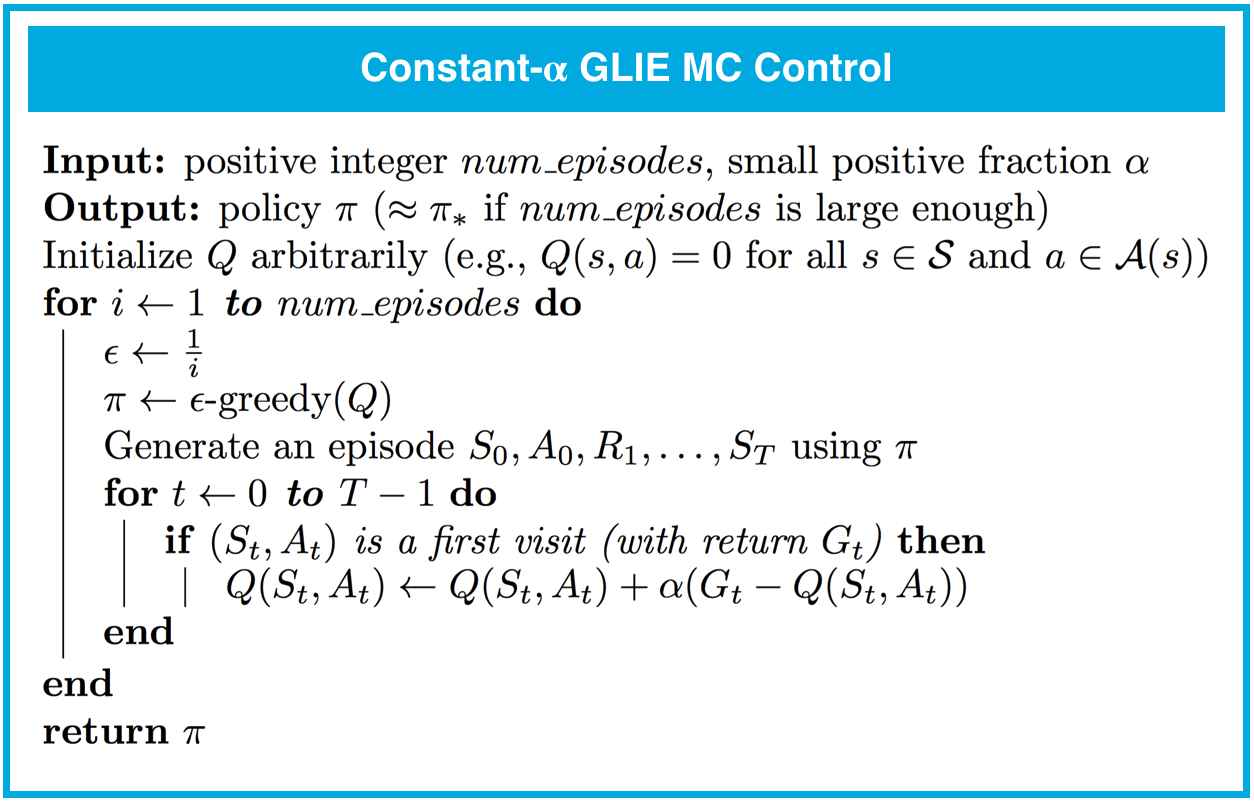

MC Control: Constant-alpha

When we adapted running_mean algorithm for Monte Carlo control in the following concept (MC Control: Policy Evaluation), the sequence corresponded to returns obtained after visiting the same state-action pair.

That said, the sampled returns (for the same state-action pair) likely corresponds to many different policies. This is because the control algorithm proceeds as a sequence of alternating evaluation and improvement steps, where the policy is improved after every episode of interaction. In particular, we discussed that returns sampled at later time steps likely correspond to policies that are more optimal.

With this in mind, it made sense to amend the policy evaluation step to instead use a constant step size, which we denoted by . This ensures that the agent primarily considers the most recently sampled returns when estimating the action-values and gradually forgets about returns in the distant past.

Setting the Value of

forgetful_mean function is closely related to the Evaluation step in constant- MC control.

How to set the value of when implementing constant- MC control:

- You should always set the value for to a number greater than zero and less than (or equal to) one.

- If , then the action-value function estimate is never updated by the agent.

- If , then the final value estimate for each state-action pair is always equal to the last return that was experienced by the agent (after visiting the pair).

- Smaller values for encourage the agent to consider a longer history of returns when calculating the action-value function estimate. Increasing the value of ensures that the agent focuses more on the most recently sampled returns.

Note that it is also possible to verify the above facts by slightly rewriting the update step as follows:

where it is now more obvious that \alphaα controls how much the agent trusts the most recent return over the estimate constructed by considering all past returns.

IMPORTANT NOTE: It is important to mention that when implementing constant- MC control, you must be careful to not set the value of too close to 1. This is because very large values can keep the algorithm from converging to the optimal policy . However, you must also be careful to not set the value of too low, as this can result in an agent who learns too slowly. The best value of for your implementation will greatly depend on your environment and is best gauged through trial-and-error.