Temporal Difference Methods

Temporal Difference (TD) Method start by focusing on the policy evaluation or prediction problem, the problem of estimating the value function for a given policy . For the control problem (finding an optimal policy), DP, TD, and Monte Carlo methods all use some variation of generalized policy iteration (GPI).

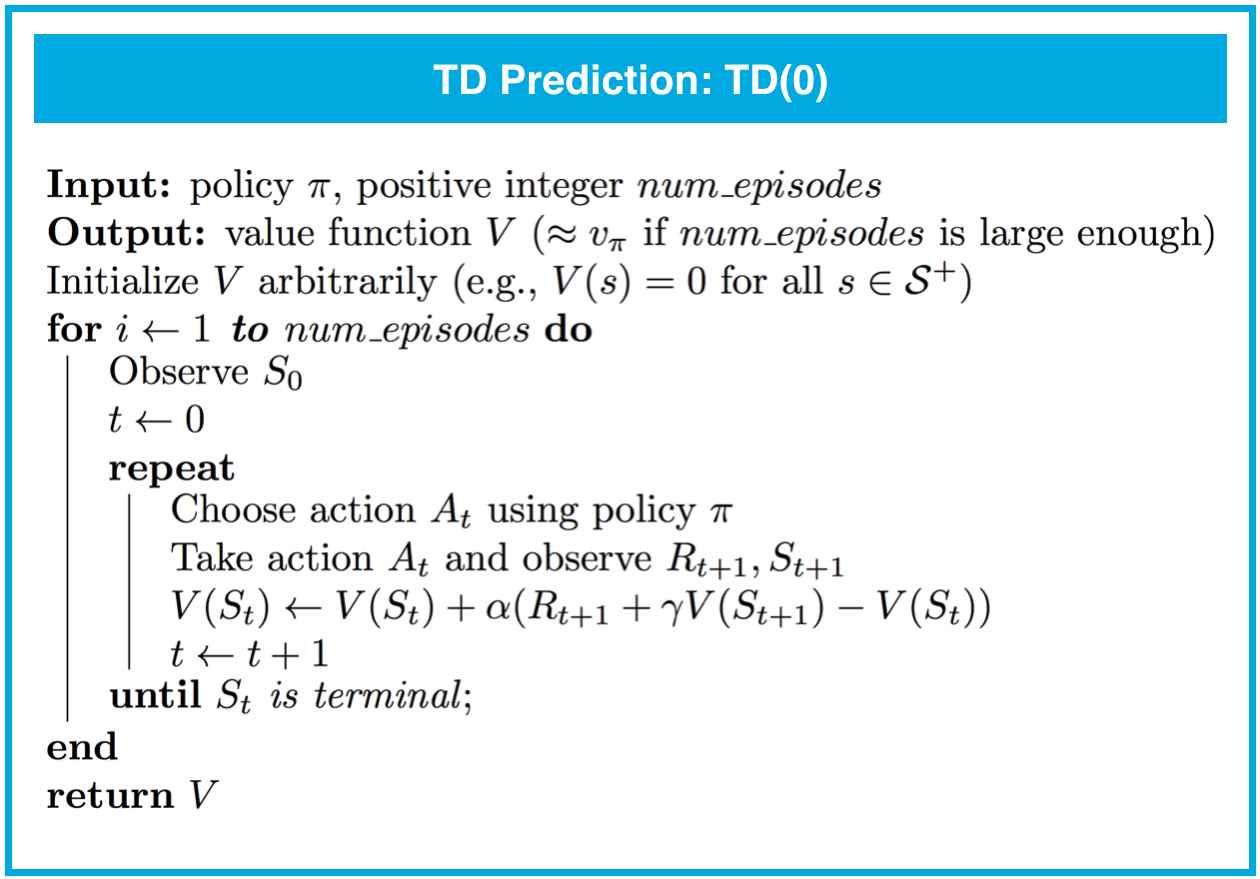

TD Prediction

Both TD and Monte Carlo methods use experience to solve the prediction problem. Given some experience following a policy , both methods update their estimate of for the nonterminal states occurring in that experience.

Roughly speaking, Monte Carlo methods wait until the return following the visit is known, then use that return as a target for . A simple every-visit Monte Carlo method suitable for nonstationary environments is

where is the actual return following time , and is a constant step-size parameter.

Whereas Monte Carlo methods must wait until the end of the episode to determine the increment to (only then is known), TD methods need to wait only until the next time step. At time they immediately form a target and make a useful update using the observed reward and the estimate . The simplest TD method makes the update

immediately on transition to and receiving . In effect, the target for the Monte Carlo update is , whereas the target for the TD update is . This TD method is called TD(0), or one-step TD,

TD(0) is guaranteed to converge to the true state-value function, as long as the step-size parameter is sufficiently small. If you recall, this was also the case for constant- MC prediction. However, TD(0) has some nice advantages:

- Whereas MC prediction must wait until the end of an episode to update the value function estimate, TD prediction methods update the value function after every time step. Similarly, TD prediction methods work for continuous and episodic tasks, while MC prediction can only be applied to episodic tasks.

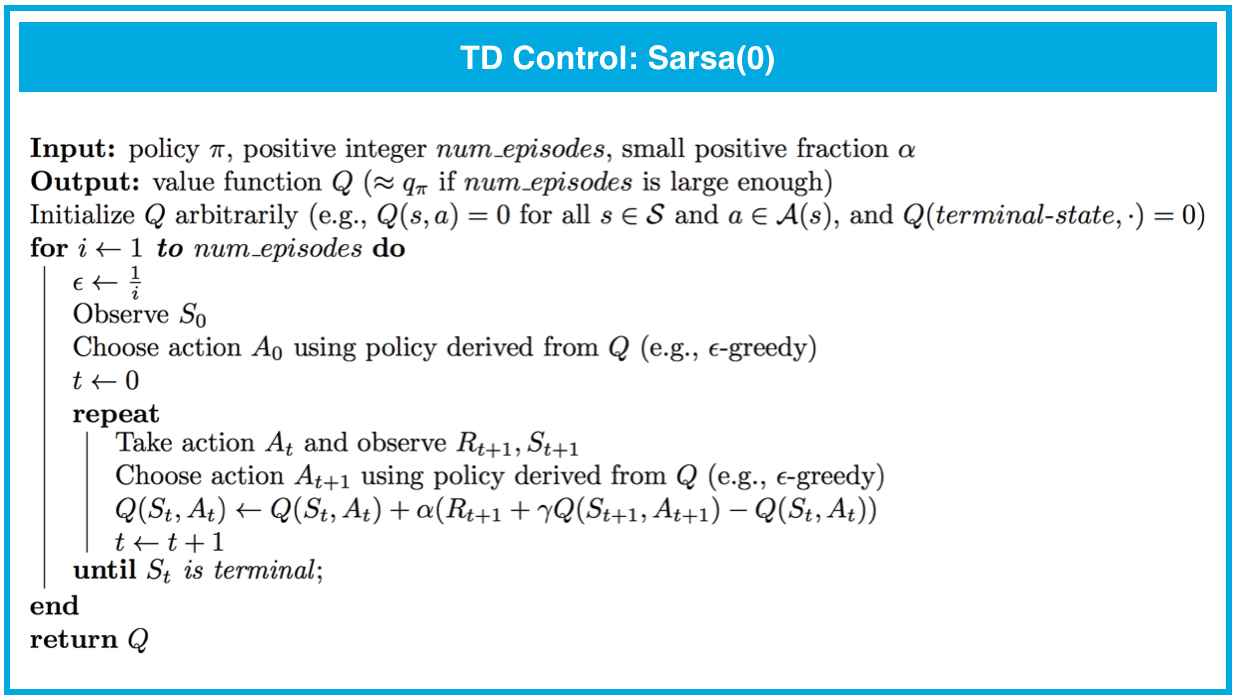

Sarsa

Sarsa(0) is guaranteed to converge to the optimal action-value function, as long as the step-size parameter is sufficiently small, and the Greedy in the Limit with Infinite Exploration (GLIE) conditions are met. Although there are many ways to satisfy the GLIE conditions, one method involves gradually decaying the value of when constructing -greedy policies.

In particular, let correspond to the -th time step. Then, if we set such that: - for all time steps , and - decays to zero in the limit as the time step approaches infinity (that is, ),

then the algorithm is guaranteed to yield a good estimate for , as long as we run the algorithm for long enough. A corresponding optimal policy can then be quickly obtained by setting for all .

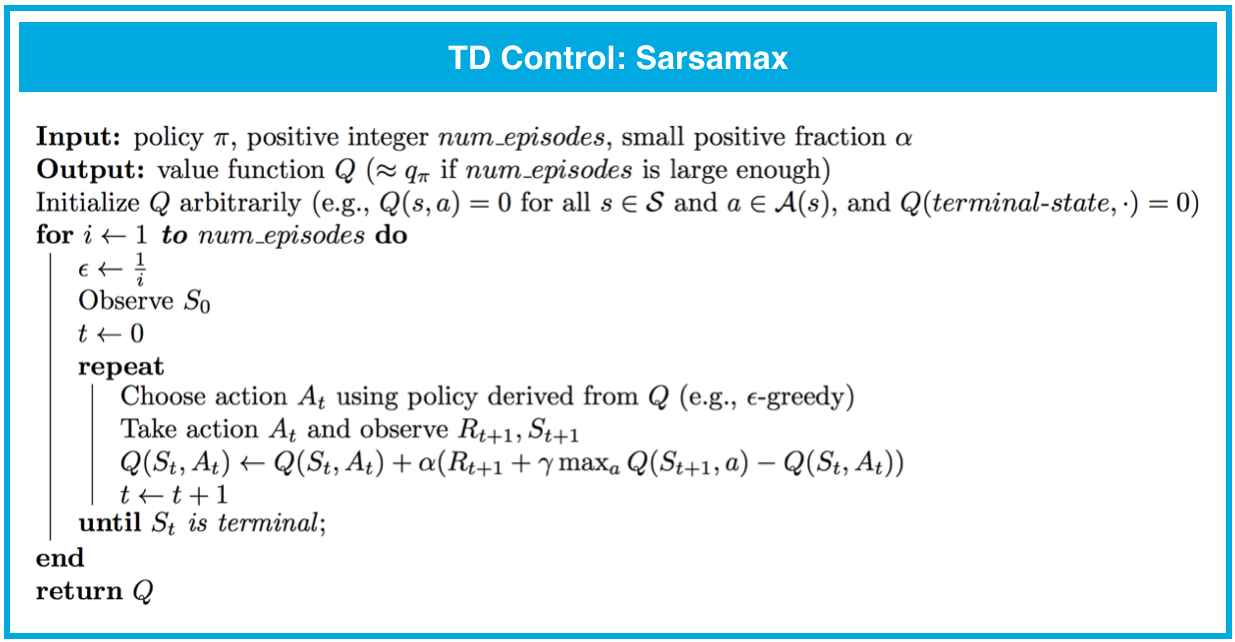

Sarsamax

Sarsamax is guaranteed to converge under the same conditions that guarantee convergence of Sarsa.

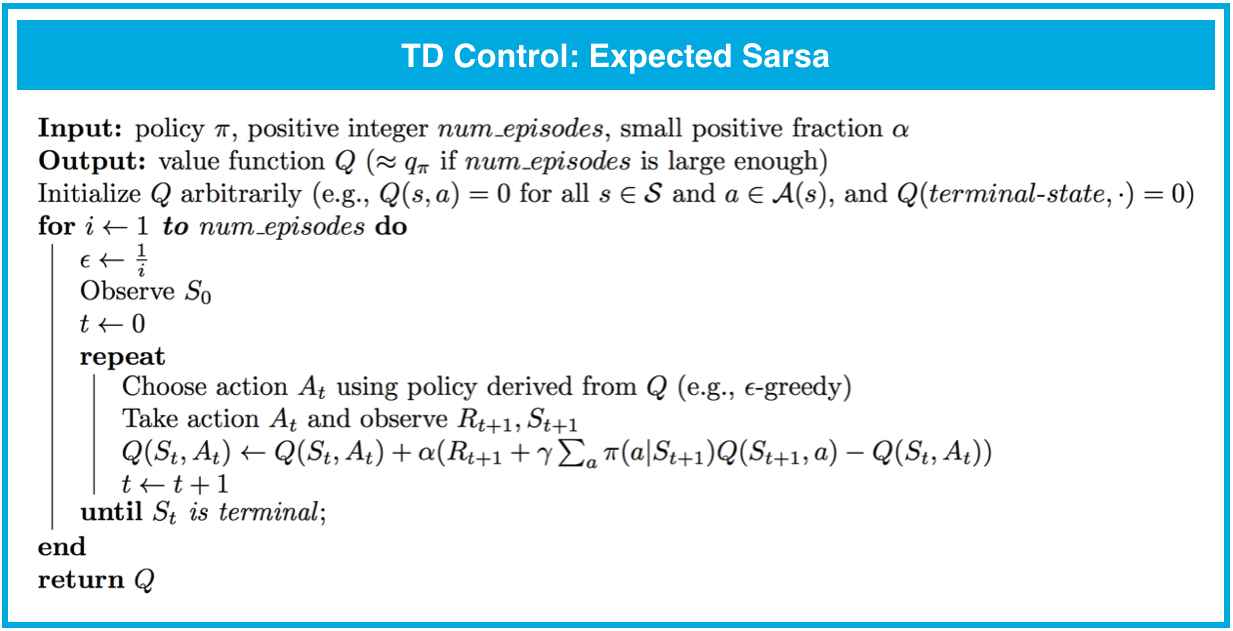

Expected Sarsa

Expected Sarsa is guaranteed to converge under the same conditions that guarantee convergence of Sarsa and Sarsamax.

Remember that theoretically, the as long as the step-size parameter is sufficiently small, and the Greedy in the Limit with Infinite Exploration (GLIE) conditions are met, the agent is guaranteed to eventually discover the optimal action-value function (and an associated optimal policy). However, in practice, for all of the algorithms we have discussed, it is common to completely ignore these conditions and still discover an optimal policy. You can see an example of this in the solution notebook.

Analyzing Performance

All of the TD control algorithms we have examined (Sarsa, Sarsamax, Expected Sarsa) converge to the optimal action-value function (and so yield the optimal policy ) if (1) the value of decays in accordance with the GLIE conditions, and (2) the step-size parameter is sufficiently small.

The differences between these algorithms are summarized below:

- Sarsa and Expected Sarsa are both on-policy TD control algorithms. In this case, the same (-greedy) policy that is evaluated and improved is also used to select actions.

- Sarsamax is an off-policy method, where the (greedy) policy that is evaluated and improved is different from the (-greedy) policy that is used to select actions

- On-policy TD control methods (like Expected Sarsa and Sarsa) have better online performance than off-policy TD control methods (like Sarsamax).

- Expected Sarsa generally achieves better performance than Sarsa.